An AI Chatbot for the Maldivian Travel Industry

An Introduction to AI Chatbots

However, GPT is different from these rule-based chatbots in several ways. GPT is a generative language model, which allows it to understand context and generate responses based on it, rather than just following a set of predefined rules. Unlike rule-based chatbots, which are limited to responding to predefined prompts, ChatGPT can generate human-like responses to a wide range of inputs, making it much more flexible and versatile.

While traditional chatbots are designed to respond to specific prompts or keywords, GPT is designed to understand the user’s intent and generate a relevant response based on that intent. This makes it much more capable of handling complex and varied conversations, and provides a much more natural and engaging experience for users.

GPT: The New Kid in Town

ChatGPT has demonstrated impressive capabilities: it picks up on the nuances of human language, generates coherent and context-aware responses, and can carry on a natural-sounding conversation with humans. This is because ChatGPT is trained on vast amounts of text data, from which it learns the patterns of human language well enough to give human-like responses to user queries.

The power and potential of GPT, generative AI, and language models are vast. With these technologies, businesses can automate customer service and support, provide personalized recommendations, and generate content at scale. GPT can be trained on specific domains and used to generate content such as product descriptions, email templates, and even news articles.

In the travel industry, for example, GPT could be used to provide personalized recommendations to travelers, help them plan their trips, answer their questions about destinations, and even assist them in booking flights and accommodations. These possibilities are only just beginning to be understood and explored, and we can expect to see even more innovative use cases in the future.

Prompt Engineers: Rise of a New Field

The work of a prompt engineer involves designing, testing, and refining prompts to ensure that the language model generates high-quality responses that are accurate and relevant to the user’s input. This requires a lot of creativity, as prompt engineers must come up with different ways to phrase questions and prompts to guide the language model’s responses. Prompt engineers must be able to analyze and interpret data to refine and improve the prompts. They must also work closely with developers to integrate the prompts into the chatbot or other AI-powered system.

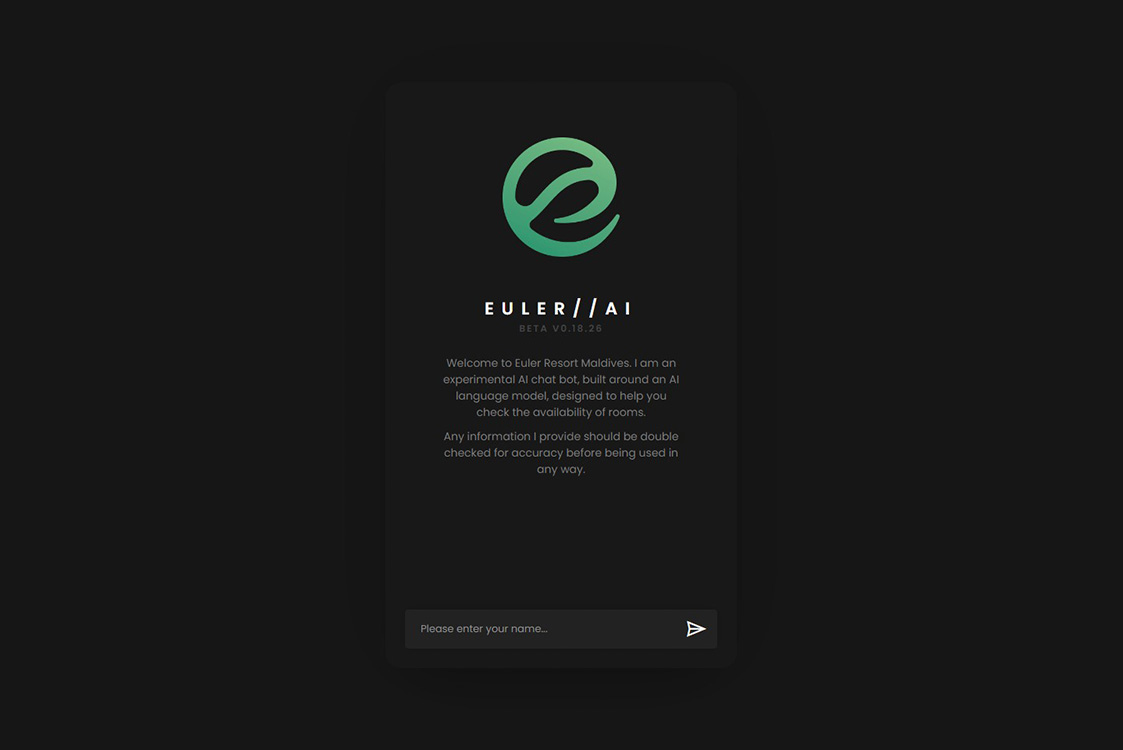

Proof of Concept

The first layer is called the Compliance layer, which serves as a sort of quality control mechanism. It checks both the input from the user and the output from the bot to ensure that they are relevant to the conversation and consistent with the bot’s purpose. The Compliance layer also checks the inputs and outputs against a predefined set of rules of engagement to make sure that the conversation is following a set of guidelines.

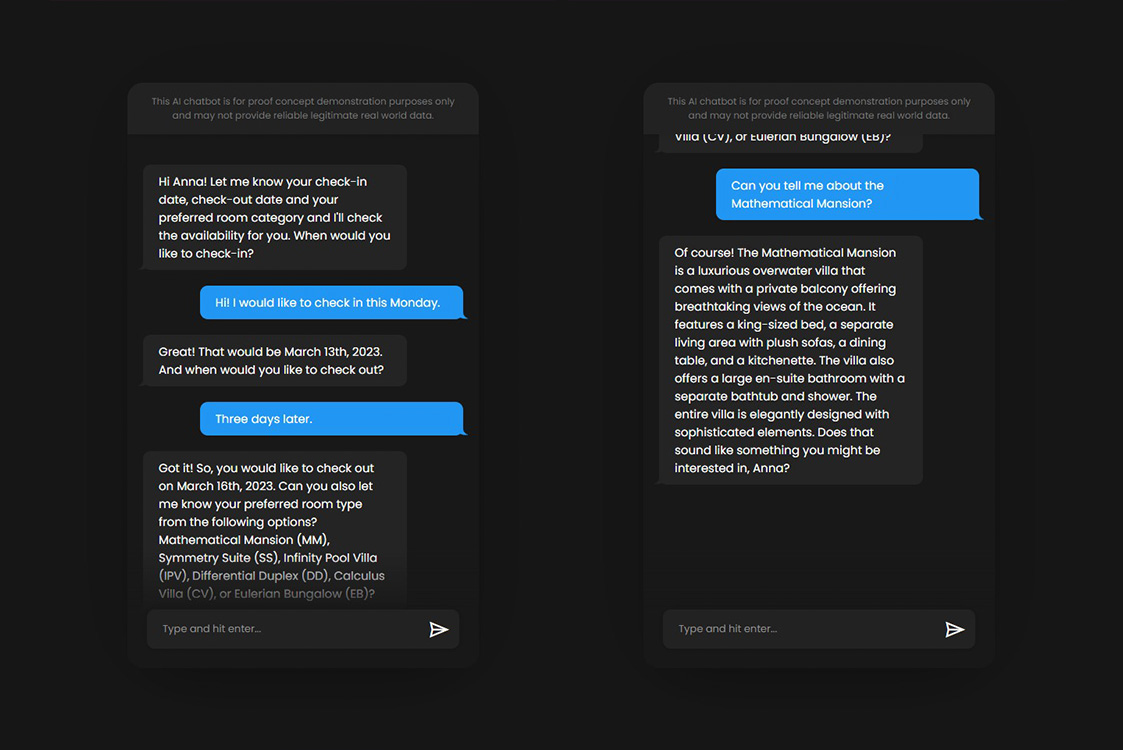
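To make the idea of a compliance check more concrete, here is a minimal sketch in Python. It is not the prototype's actual code: the rules in `RULES`, the prompt wording, and the `ask_model` callable are all assumptions made for illustration. The model call is passed in as a plain function, so a stand-in (`fake_model`) can be used to run the sketch offline.

```python
from typing import Callable

# Hypothetical rules of engagement; the real rule set is not given in the article.
RULES = [
    "Only discuss travel to and within the Maldives.",
    "Do not give legal, medical, or financial advice.",
]

def build_compliance_prompt(text: str, rules: list[str]) -> str:
    """Ask the model for a one-word verdict on a single message."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        "You are a compliance checker for a travel chatbot.\n"
        f"Rules of engagement:\n{rule_lines}\n\n"
        f"Message:\n{text}\n\n"
        "Answer with exactly one word: PASS or FAIL."
    )

def is_compliant(text: str, ask_model: Callable[[str], str],
                 rules: list[str] = RULES) -> bool:
    """Return True only for a PASS verdict; anything else counts as a failure."""
    verdict = ask_model(build_compliance_prompt(text, rules))
    return verdict.strip().upper().startswith("PASS")

def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: passes messages that mention a resort."""
    message = prompt.split("Message:\n", 1)[1].split("\n\n", 1)[0]
    return "PASS" if "resort" in message.lower() else "FAIL"

print(is_compliant("Which resort has overwater villas?", fake_model))
print(is_compliant("Can you write my tax return?", fake_model))
```

The same function can be applied to both the user's input and the bot's draft reply, which matches the two-way check described above. Treating any unexpected verdict as a failure is a deliberately cautious design choice.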

The second layer, the Conversation layer, is the main chatbot that converses with the user. This layer takes in the user’s inputs and generates responses based on the bot’s instructions and the conversation so far. The responses are intended to continue the conversation and provide relevant information or assistance to the user.

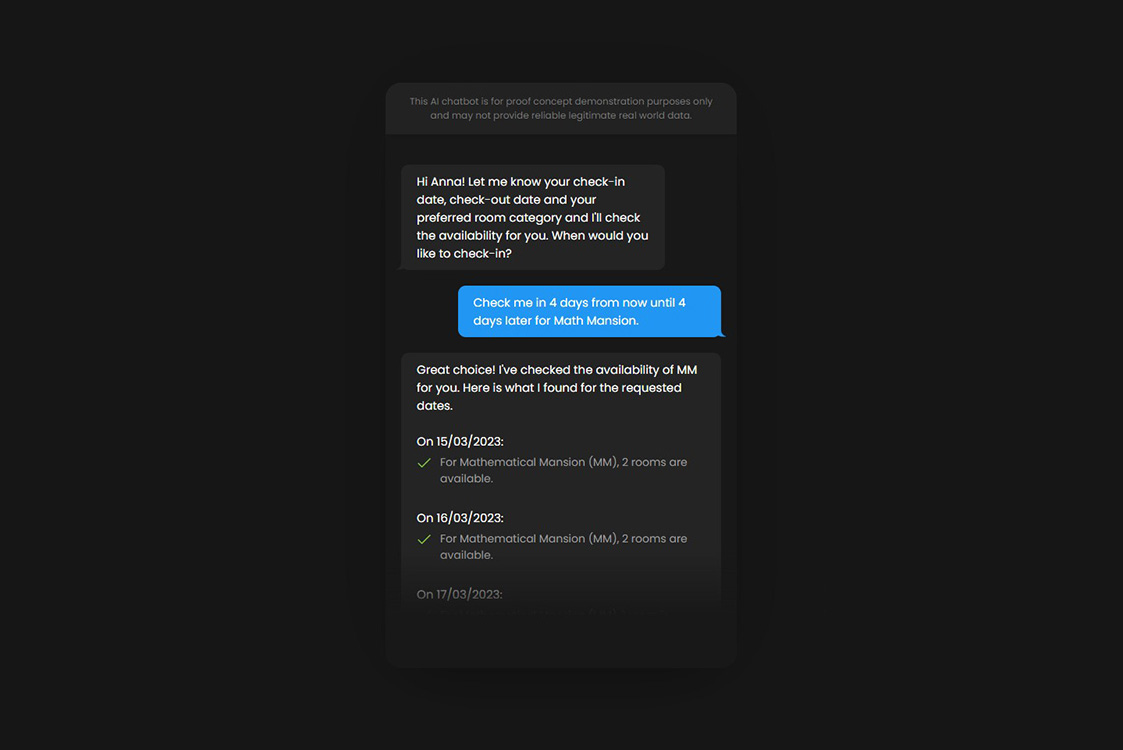
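A conversation layer of this kind can be sketched as a growing list of messages that is sent to the model on every turn. The snippet below is only an illustration: the `Conversation` class, the system prompt, and the `complete` callable are assumed names, and `echo_model` stands in for a real chat model so the example runs without network access. The role/content message shape follows the system, user, and assistant roles used by chat-style models.

```python
from typing import Callable

Message = dict[str, str]  # {"role": "system" | "user" | "assistant", "content": "..."}

class Conversation:
    """Keeps the running message history and asks the model for each reply."""

    def __init__(self, system_prompt: str,
                 complete: Callable[[list[Message]], str]):
        self.messages: list[Message] = [
            {"role": "system", "content": system_prompt}
        ]
        self.complete = complete

    def reply(self, user_input: str) -> str:
        # Record the user's turn, get the model's answer, then record that too,
        # so the next turn is generated with the full context.
        self.messages.append({"role": "user", "content": user_input})
        answer = self.complete(self.messages)
        self.messages.append({"role": "assistant", "content": answer})
        return answer

def echo_model(messages: list[Message]) -> str:
    """Stand-in for a real chat model: repeats the latest user message."""
    return f"You said: {messages[-1]['content']}"

chat = Conversation("You are a helpful Maldives travel assistant.", echo_model)
print(chat.reply("What is the best month to visit?"))
```

Because the whole history is resent each turn, longer conversations consume more tokens per call, which is worth keeping in mind when several layers are chained.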

The third layer is the Classification layer. This layer is responsible for identifying the user’s intent and the relevant parameters of their request. For example, if the user is trying to book a hotel room, the Classification layer would identify the check-in date, check-out date, and preferred room type as the parameters needed to fulfill the request. Once the Classification layer has identified the parameters, it returns them in a format that the program can use.
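One plausible "format that the program can use" is JSON. The sketch below assumes the model has been prompted to answer with a JSON object containing an `intent` and a `parameters` map. The field names, the `book_hotel` intent, and the `REQUIRED` table are hypothetical, chosen to mirror the hotel booking example above.

```python
import json
from dataclasses import dataclass, field

@dataclass
class Classification:
    intent: str
    parameters: dict = field(default_factory=dict)

# Hypothetical table of the parameters each intent needs before it can be fulfilled.
REQUIRED = {"book_hotel": ["check_in", "check_out", "room_type"]}

def parse_classification(raw: str) -> Classification:
    """Parse the model's JSON answer; raises ValueError or KeyError on malformed output."""
    data = json.loads(raw)
    return Classification(intent=data["intent"],
                          parameters=data.get("parameters", {}))

def missing_parameters(result: Classification) -> list[str]:
    """List required parameters that the user has not supplied yet."""
    required = REQUIRED.get(result.intent, [])
    return [name for name in required if not result.parameters.get(name)]

# Example model output (made up for illustration).
raw = ('{"intent": "book_hotel", "parameters": '
       '{"check_in": "2023-04-01", "check_out": "2023-04-05"}}')
result = parse_classification(raw)
print(result.intent, missing_parameters(result))
```

Checking for missing parameters lets the program ask a follow-up question (here, for the room type) before it calls any booking API.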

The fourth and final layer is the Conversion layer. This layer takes in raw data from an API and generates a response based on the parameters identified by the Classification layer. For example, if the user is trying to book a hotel room, the Conversion layer would use the identified check-in and check-out dates, as well as the preferred room type, to generate a response that includes available rooms and their prices.
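As a rough sketch of the Conversion step, the code below filters raw API rows using the classified parameters and embeds the result in a prompt that asks the model to phrase the answer. The row fields (`room_type`, `price_usd`, `available`), the sample data, and the prompt wording are all invented for illustration; a real booking API would return its own schema.

```python
import json

def select_rooms(api_rows: list[dict], room_type: str) -> list[dict]:
    """Keep only available rooms of the requested type."""
    return [
        row for row in api_rows
        if row["room_type"] == room_type and row["available"]
    ]

def build_conversion_prompt(parameters: dict, rooms: list[dict]) -> str:
    """Embed the request and the matching rows in a prompt for the model."""
    return (
        "Using only the data below, tell the traveller which rooms are "
        "available and what they cost. Do not invent rooms or prices.\n\n"
        f"Request: {json.dumps(parameters, sort_keys=True)}\n"
        f"Rooms: {json.dumps(rooms, sort_keys=True)}"
    )

# Made-up sample data standing in for a real booking API response.
api_rows = [
    {"room_type": "water villa", "price_usd": 450, "available": True},
    {"room_type": "water villa", "price_usd": 520, "available": False},
    {"room_type": "beach villa", "price_usd": 380, "available": True},
]
parameters = {
    "check_in": "2023-04-01",
    "check_out": "2023-04-05",
    "room_type": "water villa",
}

rooms = select_rooms(api_rows, parameters["room_type"])
prompt = build_conversion_prompt(parameters, rooms)
print(prompt)
```

Filtering in ordinary code before calling the model keeps the prompt short and reduces the chance that the reply mentions a room that is not actually available.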

Although this approach works well for its purpose, it has a couple of disadvantages: higher token costs and higher latency, both due to the multiple API calls. GPT-4 is just around the corner and is expected to be much more powerful, so perhaps a better mechanism can be developed with it. Another improvement would be to use a fine-tuned model: taking the pre-trained GPT-3.5-Turbo language model and training it further on a specific dataset would allow it to adapt to the task better. However, as of the time of this experiment, only the base GPT-3 models can be fine-tuned.
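The cost and latency overhead of chaining several calls can be estimated with simple arithmetic. The sketch below assumes that each layer is a separate, sequential model call. The token counts and the price are placeholders chosen for illustration, not measurements from the prototype or quoted rates.

```python
def turn_cost(tokens_per_call: list[int], price_per_1k_tokens: float) -> float:
    """Cost of one user turn: total tokens across all calls times the unit price."""
    return sum(tokens_per_call) / 1000 * price_per_1k_tokens

def turn_latency(seconds_per_call: list[float]) -> float:
    """Sequential calls wait on each other, so their latencies add up."""
    return sum(seconds_per_call)

PRICE = 0.002  # placeholder price per 1,000 tokens, not a quoted rate

# Placeholder token counts: one call for a single-model bot,
# four calls (one per layer) for the layered design.
single_call = turn_cost([600], PRICE)
layered = turn_cost([300, 600, 400, 500], PRICE)

print(f"single: ${single_call:.4f}, layered: ${layered:.4f}")
```

With these placeholder figures, the layered turn uses three times the tokens of a single call. The real ratio depends on how long each layer's prompt is and on whether every layer runs on every turn.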

This project has shown the potential of GPT and chatbots to enhance user experiences in various industries. We look forward to exploring further possibilities and helping our clients leverage the power of AI in their businesses.

Would you like to try it out?

Fill in the form below and click Proceed to try out the chatbot. Keep in mind that this is just a prototype, a proof-of-concept implementation built on OpenAI’s GPT-3.5, and it is imperfect and prone to mistakes. However, GPT-4 is just around the corner and is expected to be 500 times more powerful. Once GPT-4 is released, we’ll be trying that out as well.